Why should you care about what AI is or isn’t?

Allow me a moment of transparency before we delve into a topic related to Artificial Intelligence (AI). This article has been hard to write. Despite the complexity of AI as a discipline, its rise in accessibility and popularity means that everyone with half a presence on the World Wide Web has had something to say about it.

There’s a lot of material out there about AI – most of it fascinating! We’ve been reading, observing, taking it all in, experimenting and gaining experience with many of the AI-enabled products out there.

What has made this hard to write is a sense that we don’t want to be late to the party, and we don’t want to sound like we’re talking about something we don’t know much about (we do).

We realised that many (perhaps most) of our customers might feel something similar – that there’s this magical thing called AI that sounds uncannily human – and the world is gobbling it all up like a toddler having cake for breakfast.

But what is behind the magic?

And how do we talk about this AI magic?

As daunting as it can be to navigate all the terminology and shorthand, if you’re exploring the integration of AI-enabled solutions it’s important to understand what AI is and isn’t, so that you can make sense of how it can enable your software and business. We’ll briefly look at what AI is, which elements of it are most relevant to us, and where its value lies for business.

What is AI?

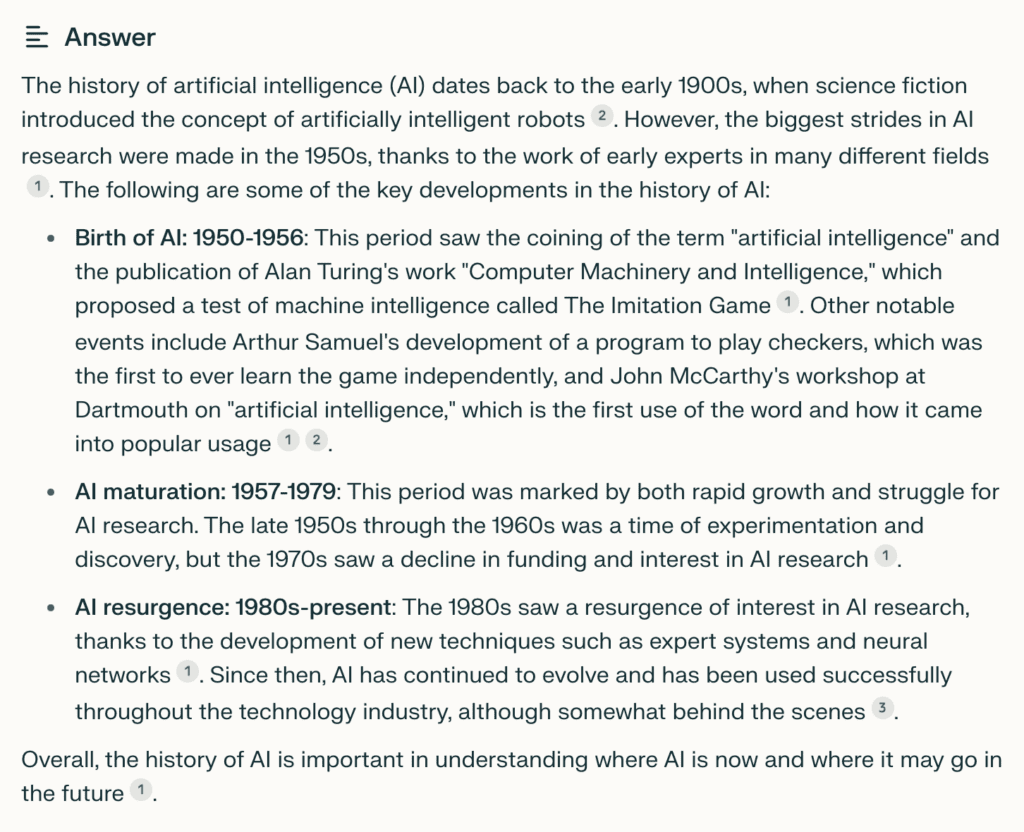

What better way to explore the different aspects of AI than to get a summary from an AI tool? I prompted Perplexity AI for a history of AI. It searched the internet and then (very concisely) summarised the results into a single response:

A notable takeaway from this summary is that AI technology has been around for a long time. The reason for the recent hype, however, lies in the improvement of large language models (LLMs). These models have enabled us to use our natural language to communicate with complex systems the way we would communicate with each other. Sure, using words to interact with computers is not entirely new – DOS commands and how people enter questions into Google Search come to mind. However, how well computers handle natural language has improved dramatically.

The natural language processing capabilities are such that a more conversational tone can now be employed, and the intended meaning remains clear. And so, a whole new way for humans to interact with computers is now possible.

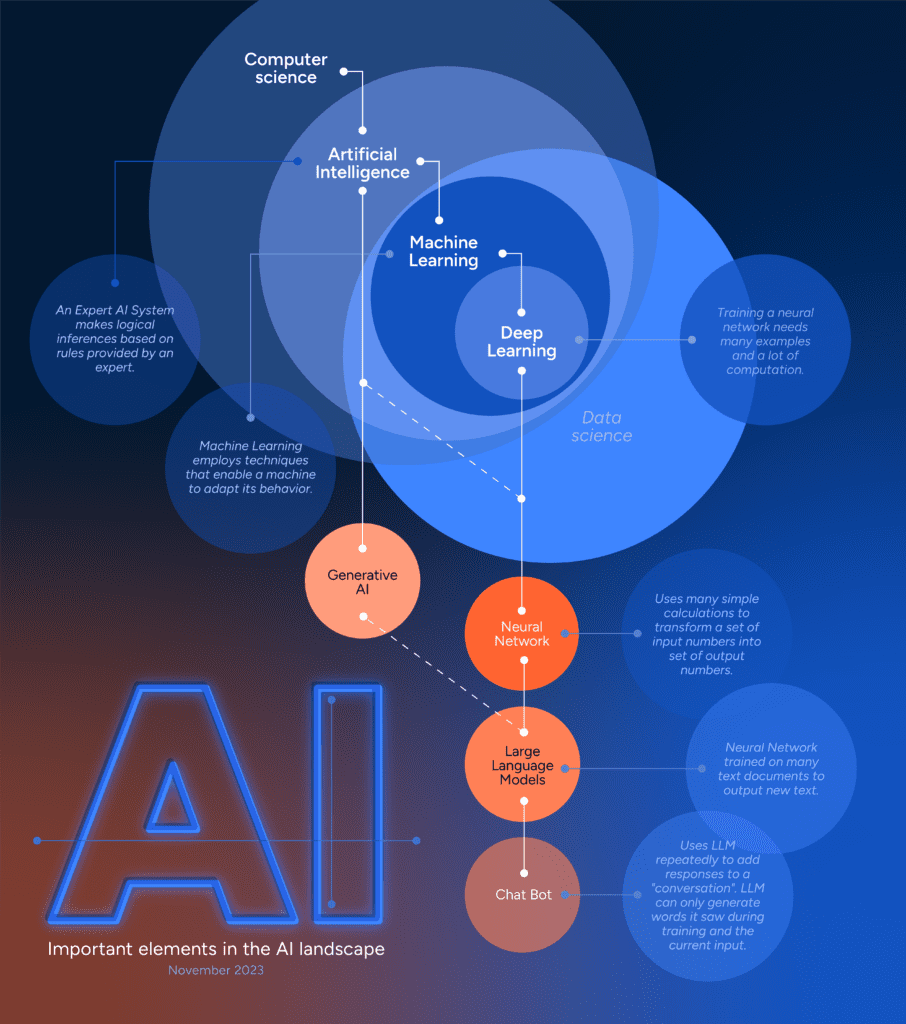

Let’s clarify the elements of AI that are most relevant to us (there are many more) and explore what they are or aren’t:

Machine learning solutions

A machine learning model is trained on large datasets – not just any data, but a large set of example inputs and expected outputs. Once trained, the model can be used to make predictions or decisions based on new data. It can learn from data and improve its performance over time without being explicitly programmed.

What machine learning is not – the same as human learning

Some AI systems can learn from data that humans have not labelled (this is called unsupervised learning) and improve over time, but this is not autonomous learning in a human sense. AI’s learning is confined to human-created algorithms and goals.

Let’s take traffic patterns as a simple example. If you train a model on past traffic pattern data as input, it could predict future traffic patterns as output. However, this input-output model cannot anticipate traffic disruptions that are not accounted for in its training data. Its learning therefore relies on being fed new data; it does not draw its own conclusions from intuition. That said, with the slightest whiff of traffic disruption in the original data, it is possible (even likely) that the output prediction will steer (pardon the pun) in that direction. This is what makes it useful for data analysis: it can and does pick up on patterns you did not know were there.

Large language models

LLMs can perform a variety of natural language processing tasks. They’re notable for their ability to achieve general-purpose language comprehension and generation. LLMs can comprehend and generate text in a human-like fashion.

AI (and LLMs) are assistive, not intuitive

Our products and services are engineered in such a way that we, as humans, can interact with them. Take the example of a TV remote control. If we want to make a thing happen on our TVs, we need to use the interface engineered to communicate with it. For example, to watch a show I have to switch the TV on, open my Netflix account, navigate to the search function, type the show’s name using the remote control interface, press enter, wait for it to find the show, and then select the show to start watching.

Today, the state of LLMs is such that I can use natural language to accomplish the same task: I say what I want to do, and the interface has the potential to understand the desired intention and act on the command. However, LLMs are not trained to comprehend; they are trained to get better at producing sequences of words that humans happen to comprehend and agree with. The intelligence is not entirely artificial – it cannot think for itself. But it can imitate intelligence – a pseudo-intelligence, if you will. This article from Angie Wang has a wonderfully fresh view on this topic – give it a read!

“A toddler has a life, and learns language to describe it. An L.L.M. learns language but has no life of its own to describe.”

Generative AI

Generative AI is a type of model that can create new content based on the data it was trained on. It starts with a prompt that could be in the form of text, an image, or any input that the AI system can process. The system then returns new content in response to the prompt. The models therefore generate new content by drawing on patterns learned from the data they have been trained on to make new predictions.

Generative AI outputs are carefully calibrated combinations of the data used to train the algorithms, and the models usually have random elements, which means they can produce a variety of outputs from one input request.
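To make the “random elements” idea a little more concrete, here is a minimal Python sketch. It is a toy, not how any particular product works: the prompt, the word list and the probabilities are all made up for illustration, and a real model would calculate such probabilities itself. It only shows how picking the next word by weighted chance lets one and the same prompt produce different outputs.

```python
import random

# Hypothetical probabilities a trained model might assign to the next word
# after the prompt "The cat sat on the". These numbers are invented.
NEXT_WORD_PROBS = {"mat": 0.6, "sofa": 0.25, "roof": 0.1, "moon": 0.05}


def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def most_likely_word(probs):
    """Always pick the single most probable word (no randomness)."""
    return max(probs, key=probs.get)


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so every run can differ
    prompt = "The cat sat on the"
    for _ in range(5):
        print(prompt, sample_next_word(NEXT_WORD_PROBS, rng))
    print("Without randomness:", prompt, most_likely_word(NEXT_WORD_PROBS))
```

Run it a few times and the five sampled endings will usually change from run to run, while the version without randomness always gives the same ending.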

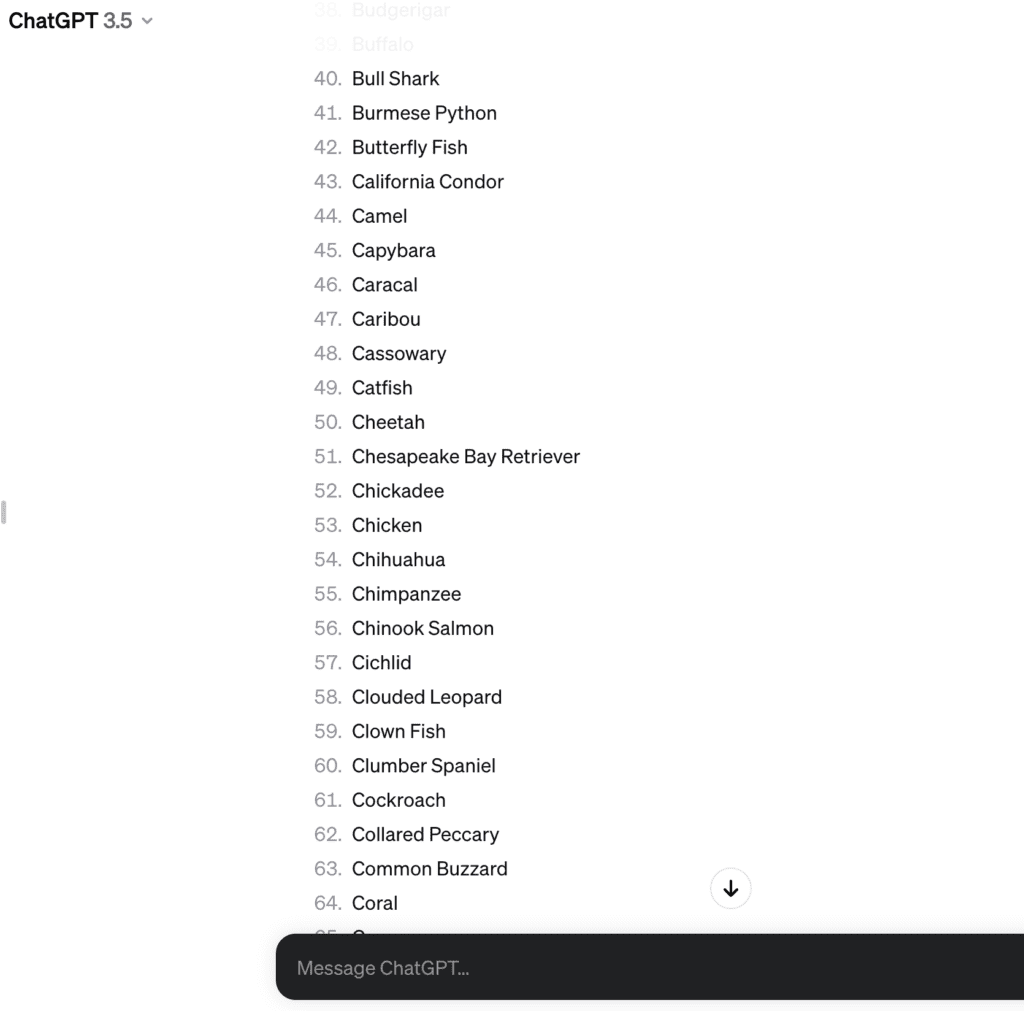

Sometimes, it gets things wrong. When generative AI is asked to generate beyond what its training data supports, it can hallucinate, meaning it produces output that sounds plausible but is inaccurate. Here’s an example:

I asked ChatGPT 3.5 for an alphabetical list of 100 mammals, and quite a few non-mammalian animals made the cut:

Generative AI needs human understanding to create meaning

AI can produce creative results, like artwork, music, or writing, but these are not the result of a conscious creative process. Deep down, generative AI is a dice roll whose outcome it interprets in ways humans find interesting.

That said, AI can use the data it’s been fed to create novel combinations of ideas or concepts. This can provide us with the chance to uncover possibilities or perspectives that we may not have considered.

AI and business: Where does the value lie?

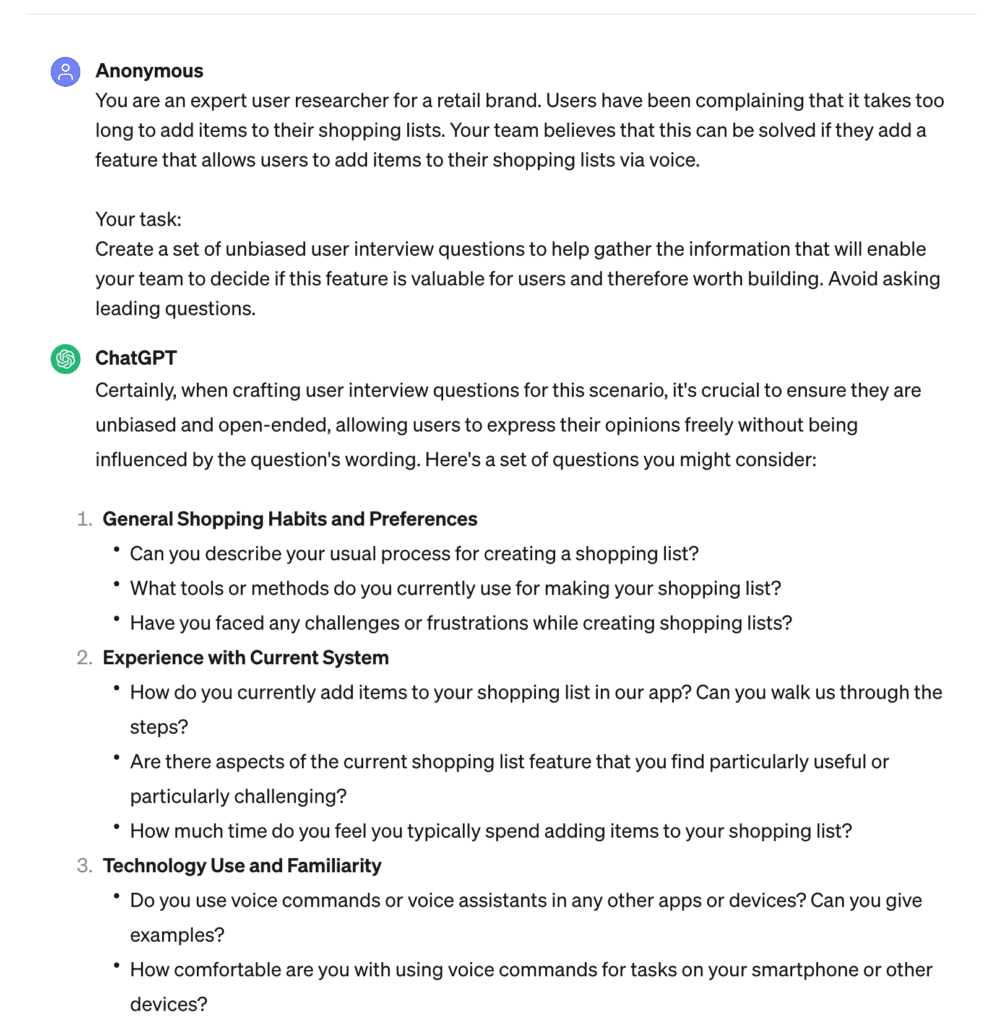

We’ve been exploring the assistive nature of AI and AI-enabled software at Polymorph. Our User Experience Team has enjoyed the efficiency of using ChatGPT to assist with user research questions. Here’s an example:

"We rarely take the responses as is and run with them, but using ChatGPT in this way helps us get from a blank page to a first draft much quicker than when it’s coming from our own brains."

It is easy to see how the assistive nature of AI can increase productivity in the workplace, and perhaps we’ve barely begun to scratch the surface of its potential.

How can AI and the ability to access your data and business processes via natural language boost your business? AI for business has a plethora of practical uses, but they come with an equal number of limitations and concerns.

We’ll delve into some of the business value of AI in the next part.